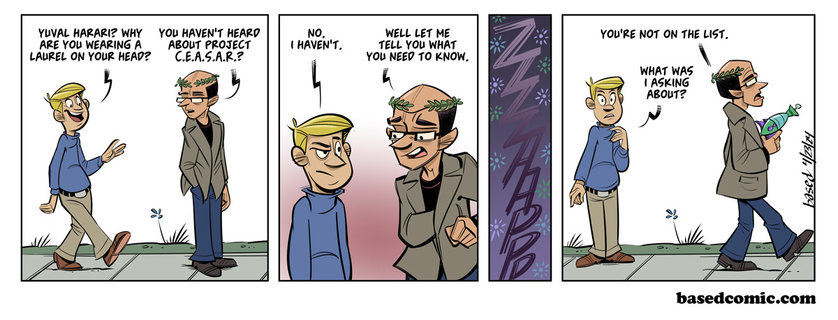

Haven't heard of the Centre for the Study of Existential Risk? Like Madonna says, maybe Yuval Harari doesn't think you're coming to the future. If you want to hear him tell you all about it, it's on video. It's another elite agency formed to convince us that AI is such an existential threat that we need one world government to combat it.

When Harari began his major media campaign to warn us about the dangers of AI, conservative pundits were surprised to find they agreed with him (they were surprised to agree with him on anything, ever). I warned that Harari’s motives wouldn’t be to our benefit. He revealed them a few weeks ago in a tweet and expanded on them when he accepted a role at the University of Cambridge Centre for the Study of Existential Risk.

He devotes an entire chapter of *Homo Deus* to the thesis that the only thing that separates us from other species and makes us more successful is our ability to cooperate. Cooperation might separate us from chimp tribes, but it doesn’t separate us from AI, which is why he’s convinced AI will be acknowledged as both sentient and deserving of rights. Against an AI that has as many rights as we do and is also smarter than we are, cooperation is the only solution, right?

Cooperation in the form of a one world government.

I’m recording a video for tomorrow on the subject.

The Chance Pin Giveaway

A pin is going into the mail today for the paid subscriber who contacted us with his address information. If you are a paid subscriber who hasn’t given us your address, get in touch anytime!

For free subscribers, entries into the giveaway continue through May 17.

Game Streams

I would normally give more of a heads-up on Notes and Locals before we go live, but we got back from dinner very close to the scheduled time. We're still figuring all of this out, and there might not be another stream until next week because Chance has a thing.